Although many of our partner academic institutions have provided guidance on the use of Generative Artificial Intelligence (AI) tools in an academic setting, that guidance has focused on student and instructor usage and may not be directly applicable to research staff, scientists and trainees engaged in research. As we see increasing reports of research teams’ use of tools such as ChatGPT to support their work, the WHRI aims to provide initial support to help investigators initiate conversations about these tools. On October 18th our CW Digital Health Research Manager, Dr. Beth Payne, held a Lunch & Learn with PHSA Director, Research Integration and Innovation, Dr. Holly Longstaff. The event focused on individuals’ use of AI in their research roles, and it enabled consultation and feedback from the CW research community on their current usage and the supports they would like for managing AI use among their teams.

As a first step in response to the Lunch & Learn discussion, we are reaching out with some general information and potential questions to support investigators, trainees and research teams in their conversations regarding appropriate use, and transparency of use, of generative artificial intelligence. We recognize that the AI field is dynamic and quickly advancing. To ensure the research institutes remain informed and are effectively using these advanced technologies, our planned next steps are to 1) develop staff-specific guidance on use of AI at work, and 2) convene an AI in the Research Workplace Working Group for WHRI and BCCHR Researchers, Trainees, and Staff to inform guidance and keep us up to date on recent advances in the AI field.

What is Generative Artificial Intelligence?

Generative AI is a form of AI that primarily uses machine learning algorithms to generate text, images or sound. Generative AI tools can be used for a variety of purposes such as summarizing information in plain language, reducing the word count of text, editing text for grammatical errors, and more.

Why does this matter in a research setting?

There is general consensus among users that generative AI tools, such as ChatGPT, Grammarly, and GitHub Copilot, can create efficiencies in the workplace; however, there are considerations unique to a research setting. Known limitations include plagiarism, general inaccuracies in output, and hallucinations, in which a tool produces plausible-sounding citations and facts that are not real.

What can you do now?

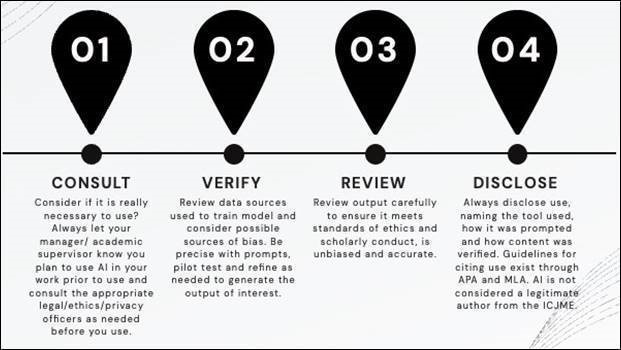

As we move to develop our additional guidance and working group, you can initiate conversations about generative AI with your research team. Table 1 provides questions and considerations to assist you in informed decision-making regarding use of generative AI in a research setting. It is intended to support responsible use of generative AI by research institute members, trainees and staff. It is adapted from Government of Canada guidance [1] and several other guidance notes [2, 3, 4]. We encourage you to talk to your staff and trainees about their current and planned use of generative AI at work. This guidance is based on four general steps for approaching use of AI in a research setting (Figure 1): consult, verify, review and disclose.

Figure 1. Steps to approaching AI use in a research setting


Table 1. Stepwise Table for use of generative AI in a research setting

| CONSIDERATION | ACTION |
| --- | --- |
| Step 1: Consult | |
| Step 2: Verify | |
| Step 3: Review | |
| Step 4: Disclose | |

We welcome your feedback on this information, any relevant resources you would like to share, and suggestions on how we can best support you and your research team in this evolving landscape. Please contact Beth Payne (Beth.Payne@cw.bc.ca) with any questions or feedback, and Kathryn Dewar (KDewar@cw.bc.ca) with concerns about AI use.

References:

- Government of Canada. (2023, September 6). Guide on the use of generative AI. Canada.ca. https://www.canada.ca/en/government/system/digital-government/digital-government-innovations/responsible-use-ai/guide-use-generative-ai.html

- Dwivedi, Y. K., Kshetri, N., Hughes, L., Slade, E., Jeyaraj, A., et al. (2023, March 11). Opinion paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. International Journal of Information Management. https://www.sciencedirect.com/science/article/pii/S0268401223000233

- School of Graduate Studies, University of Toronto. (n.d.). Guidance on the appropriate use of generative artificial intelligence in graduate theses. https://www.sgs.utoronto.ca/about/guidance-on-the-use-of-generative-artificial-intelligence

- UBC guidance. Generative AI. (2023, October 30). https://genai.ubc.ca/guidance/